Cranfield University

Projects running at Cranfield University. For further details contact Stephen Hallett.

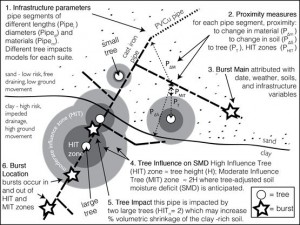

The uninterrupted supply and reliable distribution of drinking water is fundamental in a modern society. Buried water infrastructure is subject to a range of operational and environmental factors which can lead to asset failure. For the privatised water sector in the UK, utility companies are moving towards the deployment of statistical-based models for proactive asset management. For some companies, statistical-based models have facilitated the migration away from static annual burst targets, to targets which are dynamic and adjusted to observed environmental conditions. There is an increasing need for the development of accurate pipeline failure prediction models to support these asset management and regulation activities. This research evaluates several quantitative measures to improve current methods of pipeline failure prediction. The aim of this research is to establish the impact of soils, weather and trees on water infrastructure failure and to develop a series of material-specific drinking water pipeline failure models for an entire distribution network.

A quantitative assessment of the impact of data cleaning on the model error of a series of previously developed models was conducted. Material-specific variable selection and step-wise modelling approaches were used to construct a series of improved statistical models which have an increased representation of the environmental factors leading to pipeline failure. A detailed national tree inventory was investigated for its use in enhancing pipeline failure predictions and for calculating failure rates of different pipe materials under varying soil shrink-swell and tree density conditions. The value in creating separate winter and summer pipeline failure models was also evaluated, to increase representation of the highly seasonal nature of pipeline failure. Finally, a novel satellite-based approach to generate soil-related land surface deformation measurements across a regional area was investigated. The result is a series of enhanced statistical-based methods of water pipeline failure prediction and a greater understanding of the environmental drivers leading to asset failure.
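As an illustration of the failure-rate calculation mentioned above, the following minimal sketch groups pipe records by material and soil class and expresses the result as failures per kilometre per year. The record fields (`material`, `soil_class`, `length_km`, `failures`), the function name and the sample values are all hypothetical and are not taken from the research; the actual study may have used a different rate definition.

```python
from collections import defaultdict


def failure_rates(pipes, years):
    """Return failures per km per year for each (material, soil_class) group.

    pipes: iterable of dicts with the hypothetical keys
           'material', 'soil_class', 'length_km' and 'failures'.
    years: length of the observation period in years.
    """
    length = defaultdict(float)
    failures = defaultdict(int)
    for p in pipes:
        key = (p["material"], p["soil_class"])
        length[key] += p["length_km"]
        failures[key] += p["failures"]
    # Normalise by total pipe length and observation period.
    return {k: failures[k] / (length[k] * years) for k in length if length[k] > 0}


# Made-up records for illustration only (not data from the research).
pipes = [
    {"material": "cast iron", "soil_class": "high shrink-swell", "length_km": 2.0, "failures": 6},
    {"material": "cast iron", "soil_class": "high shrink-swell", "length_km": 3.0, "failures": 4},
    {"material": "PVC", "soil_class": "low shrink-swell", "length_km": 4.0, "failures": 2},
]
rates = failure_rates(pipes, years=5)
```

Normalising by both length and time lets groups with very different amounts of pipe in the ground be compared on the same scale.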

Keywords: Statistical modelling; pipeline failure; prediction; environmental risk; water utilities

Student: Matthew North

First supervisor: Dr Timothy Farewell

Email: t.s.farewell@cranfield.ac.uk

Commenced: October 2015; Completed, passed and graduated

Supervisory panel: Cranfield: Dr Timothy Farewell; Dr Stephen Hallett | Cambridge: Dr Mike Bithell | Industrial partners: Anglian Water plc.; Bluesky Ltd.

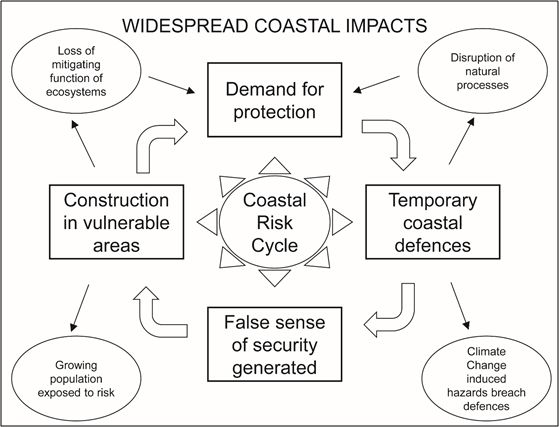

Coastal regions are some of the most exposed to environmental hazards, yet the coast is the preferred settlement site for a high percentage of the global population, and most major global cities are located on or near the coast. This research adopts a predominantly anthropocentric approach to the analysis of coastal risk and resilience. This centres on the pervasive hazards of coastal flooding and erosion. Coastal management decision-making practices are shown to be reliant on access to current and accurate information. However, constraints have been imposed on information flows between scientists, policy makers and practitioners, due to a lack of awareness and utilisation of available data sources. This research seeks to tackle this issue by evaluating how innovations in the use of data and analytics can be applied to further the application of science within decision-making processes related to coastal risk adaptation. In achieving this aim a range of research methodologies has been employed, and the progression of topics covered marks a shift from themes of risk to resilience. The work focuses on a case study region of East Anglia, UK, benefiting from input from a partner organisation responsible for the region’s coasts: Coastal Partnership East.

An initial review revealed how data can be utilised effectively within risk-based coastal decision-making practices, highlighting scope for application of advanced Big Data techniques to the analysis of coastal datasets. The process of risk evaluation has been examined in detail, and the range of possibilities afforded by open source coastal datasets was revealed. Subsequently, open source coastal terrain and bathymetric point cloud datasets were identified for 14 sites within the case study area. These were then utilised within a practical application of a geomorphological change detection (GCD) method. This revealed how analysis of high spatial and temporal resolution point cloud data can accurately reveal and quantify physical coastal impacts. Additionally, the research reveals how data innovations can facilitate adaptation through insurance; more specifically, how the use of empirical evidence in pricing of coastal flood insurance can result in both communication and distribution of risk.
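One common way to carry out geomorphological change detection is to difference two gridded elevation surfaces and ignore changes smaller than a minimum level of detection. The sketch below illustrates that idea only; it assumes the point clouds have already been gridded into elevation arrays of equal size, and the function name, grid values and threshold are hypothetical rather than taken from the study.

```python
def dem_of_difference(dem_old, dem_new, cell_area_m2, min_lod_m):
    """Difference two elevation grids and sum erosion and deposition volumes.

    Elevation changes whose magnitude is below min_lod_m (the minimum
    level of detection) are treated as noise and skipped.
    Returns (erosion_m3, deposition_m3), both as positive volumes.
    """
    erosion = 0.0
    deposition = 0.0
    for row_old, row_new in zip(dem_old, dem_new):
        for z_old, z_new in zip(row_old, row_new):
            dz = z_new - z_old
            if abs(dz) < min_lod_m:
                continue  # change too small to distinguish from survey error
            if dz < 0:
                erosion += -dz * cell_area_m2
            else:
                deposition += dz * cell_area_m2
    return erosion, deposition


# Made-up 2 x 2 elevation grids (metres) for two survey dates.
dem_first = [[5.0, 5.0], [4.0, 3.0]]
dem_second = [[4.0, 5.05], [4.0, 3.5]]
erosion, deposition = dem_of_difference(
    dem_first, dem_second, cell_area_m2=1.0, min_lod_m=0.1
)
```

Reporting erosion and deposition separately, rather than only the net change, avoids large opposing changes cancelling each other out.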

The various strands of knowledge generated throughout this study reveal how an extensive range of data types, sources, and advanced forms of analysis, can together allow coastal resilience assessments to be founded on empirical evidence. This research serves to demonstrate how the application of advanced data-driven analytical processes can reduce levels of uncertainty and subjectivity inherent within current coastal environmental management practices. Adoption of methods presented within this research could further the possibilities for sustainable and resilient management of the incredibly valuable environmental resource which is the coast.

Keywords: Coastal management, resilience, risk-based decision-making, data analytics, open source data, insurance, geomorphological change detection, GIS, Big Data

Student: Alexander Rumson

First supervisor: Dr Stephen Hallett

Commenced: October 2015

Supervisory panel: Cranfield: Dr Stephen Hallett; Tim Brewer | Industrial partner: East Suffolk Council; Anna Harrison, British Geological Survey

The day-to-day dynamics of groundwater behaviour are driven by local weather and recharge patterns, but previous research has identified that there are also significant temporal relationships with large-scale ocean-atmosphere conditions. This research seeks to expand on this earlier work by combining advanced statistical methods, extensive spatiotemporal (including climatological, meteorological, hydrogeological) datasets and groundwater modelling to further analyse these relationships so as to be able to better predict and manage groundwater level response to extreme events. The research focuses on a large dataset of long-term groundwater level records from boreholes across the UK (and more broadly in Europe where available) to understand the differences in sensitivity between different aquifers or climatological areas. The student is being supported by an experienced supervisory team from Cranfield and Birmingham universities and the British Geological Survey and will be provided with extensive training within the DREAM programme’s Advanced Technical Skills and Transferable Skills and Leadership training.

Student: William Rust

First supervisor: Professor Ian Holman

Email: i.holman@cranfield.ac.uk

Telephone: +44 (0) 1234 758277

Commenced: October 2015

Supervisory panel: Cranfield: Professor Ian Holman; Dr Ron Corstanje | Birmingham: Dr Mark Cuthbert | Industrial partner: British Geological Survey

This research will use a case study approach to develop a new generation of data-oriented informatic tools for the management of the complex and multiple data underpinning total expenditure decision-making in the water utility sector. The proposed programme of research will focus on a comparative evaluation of existing multi-stakeholder tools for asset management planning and will develop a series of analytical tools, exploring contrasting technical and software approaches. The research will lead to an understanding of the range and applicability of multivariate analytic tools, and will ultimately assess how these complex outputs may be visualised such that customer-driven outcomes are derived and management decision-making is supported. Data will be drawn from case studies drawing on the Atkins UK and EU water industry networks.

Specific case studies for this research will derive from the UK Chemical Investigations Programme (CIP) (£35m) research innovation programme (2010-2013), managed by Atkins and informing UK and EU chemical regulation under the Water Framework Directive (https://www.ukwir.org/site/web/news/news-items/ukwir-chemicals-investigation-programme). Working with Defra, UK Environment Agency, UK Water Industry and Ofwat, and in response to emergent legislation on surface water quality, CIP sought to gain better understanding of the occurrence, behaviour and management of trace contaminants in wastewater treatment process and in effluents. CIP has generated a vast and varied body of data that now paves the way in this study for the development of tools for rational prioritisation of future actions, supporting a transparent and informed discussion of the available options required to manage trace substances in the water environment. Further to this, Atkins are also now supporting the UKWIR ‘follow-on’ programme (£120m) of UK innovation, embracing environmental challenges, technological development and economic decision-making. Additional case studies can draw from this programme, providing a basis for further integrated analytical tools. The outline for multivariate analysis tools linked to visualisation of outputs in this arena will be used to inform outcomes and to explore opportunities in the water sector.

A unique aspect of this project is that it will seek to inform the UK water industry as to the options and opportunities for meeting EU and UK environmental drivers and, due to its economic implications, it will also inform the introduction of competition into the UK water market. Therefore the proposed case studies approach should prove of significant importance to the UK on both an environmental and economic level.

Flood modelling and forecasting are essential tools to inform infrastructure and emergency planning. Accurate forecasts, though, are difficult to achieve, even in developed countries with decades of experience and detailed topographical and hydrological datasets for calibration and validation, as demonstrated by the recent Cumbria floods. Forecasting is even more challenging in large tropical regions, which have limited data availability (e.g. few river gauging stations), and modest meteorological forecasting capabilities. More worryingly, their large human populations, economically-important industries (e.g. oil/gas, agriculture), and ecologically-important habitats mean that flooding is connected to multiple other significant risks.

This PhD project will attempt to overcome some of these challenges by (1) using new near real-time, high resolution satellite datasets to improve the medium- and short-term flood risk assessment generated by probabilistic ensemble flood forecasting for data-poor tropical regions, and (2) applying the flood model to the assessment of flood-induced pollution risk. The case study for the project will be the Mexican State of Tabasco, which occupies a large, low-lying, topographically-complex area that experiences flooding from several large rivers (e.g. Grijalva-Usumacinta systems, 1,911 km long network, 128,390 km² catchment area) which are affected by weather systems from both the Pacific Ocean and Caribbean Sea. The State is home to a large, economically-important on- and off-shore oil industry, which is both impacted by the flooding and a source of significant pollution risk during floods. Consequently, accurate flood forecasts are needed to advise the population, protect or relocate sensitive oil extraction and refining infrastructure, and to assess the risk of pollutant mobilisation (i.e. oil and associated chemicals) which could significantly impact water quality, agriculture or sensitive ecological habitats.

The researcher is working closely with academics at the Universidad Juárez Autónoma de Tabasco (UJAT), who have offered additional support for the project to allow the student to spend a significant amount of time in Mexico (2-3 months per year) and to access new high-resolution, 1-day return period satellite data. The project will have a direct and immediate impact on flood and pollution risk management in Tabasco, as the PhD outputs will feed into UJAT’s development of an operational water risk management system for the State.

First supervisor: Dr Bob Grabowski

Email: r.c.grabowski@cranfield.ac.uk

Telephone: +44 (0) 1234 758360

Commenced: October 2016

Supervisory panel: Cranfield: Dr Bob Grabowski; Tim Brewer | Partner: University of Tabasco, Mexico

Sustainable intensification of agriculture is a vital approach to maintaining the balance between providing food for a growing global population and preserving soil as a valuable resource. Currently the state of intensively farmed arable land is poor, and the use of organic amendments such as compost, farmyard manure and other agricultural residues can provide the soil organic matter required to restore soil health. Organic amendments also contain nutrients needed by crops, but their availability needs to be determined accurately to meet crop demands. At the moment, samples need to be sent to laboratories for nutrient analysis, and farmers need to consider the results before applying the amendments to land. In practice this does not happen, and farmers bulk apply organic amendments without fully considering their nutrient content. Whilst bulk application of organic amendments can build soil organic matter, nutrients that become available in excess of crop demands pose a risk of contaminating the environment. A solution to this challenge would be the development of an in-field diagnostic tool that can be used to determine the nutrient content of organic amendments in an accurate and precise manner.

This PhD offers an exciting and challenging opportunity for a suitable candidate to develop an in-field diagnostic tool. Initial proof-of-concept work at Cranfield University will underpin this project. Two industrial sponsors (AKVO www.akvo.org, and the World Vegetable Centre www.avrdc.org) will contribute to the project; they have access to several thousand field sites in Vietnam and Cambodia, where data from soil and organic amendment samples will be collected and the in-field diagnostic tool will be developed and validated. The principle behind the development of this tool is to produce a mobile phone app that can be used to determine the nutrient content of organic amendments in developing countries where access to laboratories is limited. The aspiration is to develop, use and validate this tool using a large dataset, to minimise the risk from over-applying organic amendments.

Please contact Dr Ruben Sakrabani (Cranfield):

Email: r.sakrabani@cranfield.ac.uk

Telephone: +44 (0) 1234 75 8106

Supervisory panel: Dr Ruben Sakrabani (Cranfield); Dr Stephen Hallett (Cranfield)

Industrial partner: Mr Joy Ghosh (AKVO) joy@akvo.org, and Mr Stuart Brown (World Vegetable Centre) stuart.brown@worldveg.org

Biological air pollution (bioaerosols) consists of airborne microorganisms, particularly fungi and bacteria. Bioaerosols from composting facilities have the potential to cause health impacts and are regulated by the Environment Agency. People living near composting facilities are concerned about the impacts on their health. Current monitoring methods use spot measurements and so only provide an indication of concentrations for the particular short-term measurement period. Novel methods for monitoring bioaerosols are being tested. These newly emerging measurement techniques have the potential to radically increase the amount and extent of data collected on bioaerosols.

This exciting project provides the opportunity to work with the Environment Agency, to explore innovative methods of collating, analysing and interpreting different sources of bioaerosol data to produce new insights and risk maps. These will provide new insights into how composting can be managed for the benefit of local citizens. This project will also work with interested parties, such as the Environment Agency, local authorities and businesses, to understand their perceptions and opinions of the impacts from composting facilities: for example, whether they are actively supportive of composting, unaware of or uninterested in its development, or significantly opposed to it. All the results will be used to create a toolkit to communicate the risks of bioaerosols, focussing on how the uncertainties are explained and managed. In addition to working with the Environment Agency, this PhD student will work within a supportive team of researchers working on waste management and bioaerosol science.

Student: Martina Della Casa

Please contact Dr Gill Drew:

Email: g.h.drew@cranfield.ac.uk

Telephone: +44 (0) 1234 750111 x2792

Supervisory panel: Dr Gill Drew (Cranfield); Dr Kenisha Garnett (Cranfield); Dr Iq Mead (Cranfield)

Industrial partners: Rob Kinnersley (EA) rob.kinnersley@environment-agency.gov, and Kerry Walsh (EA) kerry.walsh@environment-agency.gov.uk

The problem: Vast amounts of clean water are lost from the water supply network each year. Ageing pipes often fail as a result of soil corrosivity, or the seasonal shrink-swell cycle of clay soils. Soil spatial distribution is complex and existing soil maps[1], while useful, do not provide sufficient detail to identify vulnerable water network segments for upgrade.

This research will address these problems through delivering three components:

- Creation of new, detailed soil hazard maps, predicting soil corrosivity and shrink-swell potential at 50m resolution.

- Development of enhanced pipe failure models which predict where and when pipes will fail on the basis of soil, weather and infrastructure parameters.

- Advising Anglian Water on which pipes to upgrade to reduce leakage, energy use and customer interruptions.

Through the research, developing and using high resolution predictive soil maps[1],[3] and burst models, water companies will be able to better identify, and upgrade, vulnerable parts of their networks. The many resulting benefits from your work will include a reduction in leakage, reduction in energy use and reduction in interruptions to customer supplies.

Our climate is changing[2]. Hotter, drier summers cause issues for water supply, and chaotic weather patterns cause havoc with traditional infrastructure modelling. The old approach of comparing infrastructure performance with previous months or years is no longer fit for purpose. This project will be closely aligned to industry, seeking to better our understanding of the dynamic interactions between soil, weather and infrastructure. Through the research, we will be able to improve our ability to benchmark and improve network performance, developing new digital soil mapping techniques to enhance our understanding of the soil. The outputs will be integrated into predictive burst models, and used to provide guidance to Anglian Water on where it can best target its financial investments, so that it can reduce leakage to a negligible level.

[1] LandIS – the Land Information System (www.landis.org.uk)

[2] Bluesky National Tree Map (www.blueskymapshop.com/products/national-tree-map)

[3] Met Office 3 hourly forecast data: (www.metoffice.gov.uk/datapoint/product/uk-3hourly-site-specific-forecast)

Student: Giles Mercer

Please contact Dr Timothy Farewell (Cranfield), Senior Research Fellow in Geospatial Informatics

Email: t.s.farewell@cranfield.ac.uk

Telephone: +44 (0) 1234 752978

Industrial partner: Anglian Water

Information on illicit poppy cultivation in Afghanistan is of critical importance to the opium monitoring programme of the United Nations Office on Drugs and Crime (UNODC). The pattern of cultivation is constantly evolving because of environmental pressures, such as water availability, and social and economic drivers related to counter narcotics activity. Remote sensing already plays a key role in gathering information on the area of opium cultivation and its spatial distribution.

The shift to cloud computing opens up exciting possibilities for extracting new information from the huge amounts of satellite data from long-term earth observation programmes. You will test the hypothesis that inter-annual and intra-seasonal changes in vegetation growth cycles are predictors of poppy cultivation risk. This will involve using emerging cloud based technologies for processing image data into accurate and timely information on vegetation dynamics relating to opium cultivation. The research will be conducted in collaboration with the UNODC.

Student: Alex Hamer

Please contact Dr Toby Waine (Cranfield):

Email: t.w.waine@cranfield.ac.uk

Telephone: +44 (0) 1234 750111 x 2770

Supervisory panel: Dr Toby Waine (Cranfield); Dr Dan Simms (Cranfield)

Industrial partner: United Nations Office on Drugs and Crime, Vienna

Landscape is the arena in which natural capital (providing ‘supporting’, ‘provisioning’, ‘regulating’ and ‘cultural’ ecosystem goods and services) interacts with elements of the other four ‘capitals’ to create the real places that people inhabit, derive benefits from and care about. Throughout time, landscapes change as a result of natural processes, but the rate of change is now orders of magnitude greater due to anthropogenic activity. Thus key questions facing those organisations involved in landscape management and policy include:

- Is the rate of landscape change taking place today sustainable in terms of conserving the diversity of character and functions? and critically,

- How resilient are England’s landscapes to change?

Natural England is one such organisation, responsible for delivering the Government Agenda in this field. Conservation 21 – Natural England’s conservation strategy for the 21st Century, places emphasis on ‘resilient landscapes’. Significantly they argue that ‘resilient’ landscapes must be both ecologically and culturally resilient (implying culturally valued/supported/voted-for etc.), highlighting that landscapes lacking cultural resilience are unlikely to be ecologically resilient in the long term.

Working in partnership with Natural England’s Strategy Team, this exciting studentship will investigate the use of big data relating to natural and social sciences, together with, for example Virtual Reality visualisation and big data techniques, to provide an holistic, integrated analysis of ecosystem service provision as experienced through society’s perception of the changing landscapes around them and in a way that secures assessment of ecological and cultural aspects of the management of the natural environment equally. This will then enable development of management and intervention aimed at enhancing natural capital, ecosystem goods and services in their cultural context, by testing different scenarios. The studentship will be based in the Cranfield Institute for Resilient Futures and make extensive use of the NERC funded Ecosystem Services Databank and Visualisation for Terrestrial Informatics Laboratory, which includes a portable Virtalis VR system and GeoVisionary software.

Student: Matthew Webb

Please contact Dr Simon Jude

Email: s.jude@cranfield.ac.uk

Supervisory panel: Dr Simon Jude (Cranfield); Tim Brewer (Cranfield).

The project will involve close collaboration with Natural England.

The future is already here: it’s just not very integrated… Currently around 50% of the world’s population lives in cities; this will grow to 75% by 2050. The OECD estimates it will cost $45 trillion USD between now and 2050 to upgrade infrastructure in our towns and cities across the world whilst also managing the impact of climate change.

Here in the UK, the Government, for example, is targeting a 33% cost reduction across the whole life of assets by 2025. What’s more, it is demanding a 50% reduction in time and a 50% reduction in greenhouse gas emissions.

The infrastructures of our cities (buildings, roads, power, water, internet) are increasingly interconnected, and Digital Engineering will transform the way in which construction projects are conceived, planned and executed, and the way in which infrastructure assets are created, operated and used by citizens. 3D object modelling, laser scanning, drones, augmented reality and the internet of things are just some of the technological innovations which are already transforming infrastructure systems. Working with Atkins, a global cross-sector infrastructure design consultancy, this project will investigate how enhanced social, economic and environmental outcomes can be achieved by the adoption of Digital Transformative Technologies and protocols. Research methods will be developed to determine where city infrastructure system integration is appropriate, how metrics and measures of benefits may be established and consequently indicate how optimal, integrated infrastructure systems management could be developed. Appropriate integrated cross-sector case studies will be developed to demonstrate the findings and provide real world context.

The project will include opportunities for secondment to work alongside Atkins staff to gain a valuable first hand experience of working in this sector. The wider DREAM CDT offers an exciting range of training and support to develop both technical and personal skills.

Student: Avgousta Stanitsa

Please contact Dr Stephen Hallett, DREAM CDT Director

Email: s.hallett@cranfield.ac.uk

Telephone: +44 (0) 1234 750111 x2750

Industrial partner: Dr Arthur Thornton, Associate Director: Infrastructure, Atkins Global plc., Epsom office, KT18 5BW

Telephone: +44 (0)1226370233

Email: arthur.thornton@atkinsglobal.com

If you want to help save water, save energy and prevent the loss of water supply to thousands of people through the use of environmental data science, read on.

The problem: Water pipes often fail as a result of weather conditions, such as cold weather events, or rapid changes in soil moisture deficit. Because water companies do not know where or when to expect burst pipes, they do not respond as fast as they would like. This means that valuable water is lost, the energy used to treat that water is wasted, and people are without water, sometimes for sustained periods.

Where you come in: Through your research you will predict where and when pipes will fail, enabling water companies to be on hand to respond more quickly to burst water mains. There are three main stages to this PhD. You will:

- Develop and test back-looking high resolution predictive burst models based on soil[1], weather and infrastructure parameters.

- Develop and test forward-looking predictive models of burst locations for the coming week, using available forecast data.

- Work with Anglian Water to build an early warning system which identifies areas of the network to prioritise for maintenance in the coming week.

Your work will result in a new data tool which will enable Anglian Water to predict where and when its pipes are likely to burst given the predicted weather conditions. This will enable the water utility to respond more quickly to bursts, as it will have a better understanding of where the bursts are likely to occur. You will be working in an active and truly supportive research team. We are closely aligned to industry, and seek to better our understanding of the dynamic interactions between soil, weather and infrastructure.

Through the course of this PhD you will develop big data skills which enable you to clean, process and use vast datasets, ranging from 15 minute interval pipe pressure management data and 3 hourly weather forecast data[3] to more static datasets which describe the soil[1], vegetation[2] and infrastructure parameters. Unlike some PhD projects, this project seeks to solve a real world problem. As well as being academically stimulating, the project has industrial backing. You will discuss and integrate your research with front-line infrastructure operators, so they can improve their service through the use of your results.

By the end of the PhD programme you will have developed highly transferable skills to become a leading force in environmental data science. You will have developed a network of industry contacts to ensure your science is relevant enough to bring lasting benefit. These contacts and skills will also open the doors to rewarding and stimulating careers in industry and academia. No one wants to be stuck in a boring, irrelevant job. This PhD offers you a chance to develop skills and relationships to help ensure you never will be.

If using environmental science to better the management of our limited natural resources excites you, we strongly encourage you to apply for this PhD.

[1] LandIS – the Land Information System (www.landis.org.uk)

[2] Bluesky National Tree Map (www.blueskymapshop.com/products/national-tree-map)

[3] Met Office 3 hourly forecast data: (www.metoffice.gov.uk/datapoint/product/uk-3hourly-site-specific-forecast)

Please contact Dr Timothy Farewell (Cranfield), Senior Research Fellow in Geospatial Informatics

Email: t.s.farewell@cranfield.ac.uk

Telephone: +44 (0) 1234 752978

Industrial partner: Anglian Water

The South East of the UK is one of the most densely populated areas of Europe. The demands on infrastructure, water needs and urban development place particular pressures on greenspace and the natural environment. What is still missing is a set of tools which can assess the impact of these planned developments on the natural environment and which will allow decision makers to evaluate the possible benefits as well as impacts of particular development schemes (e.g. greenspaces, eco developments, better water infrastructure). This PhD will seek to develop these tools, describing the changes in ecosystem goods and services as various development schemes or infrastructure plans are assessed. For these tools to be practical and useful to industry, they need to be both reflective of the underlying natural processes and easy and time efficient in their use. Interacting closely with our industrial partners, the PhD will apply these tools to current development schemes in the South East, ensuring that the research is exciting, current and immediately applicable to contemporary societal needs.

Please contact Professor Ron Corstanje (Cranfield), Professor of Environmental Data Science, Head of Centre, Centre for Environmental and Agricultural Informatics (CEAI)

Email: roncorstanje@cranfield.ac.uk

Cranfield University Supervisors: Professor Ron Corstanje (Cranfield), Professor Jim Harris FRSB, FIAgrE

Industrial partner: Mott MacDonald

Background: Currently, food quality and safety controls rely heavily on regulatory inspection and sampling regimes. Such approaches are often based on conventional chemical and microbiological analysis, making the ultimate goal of 100% real-time inspection technically, financially and logistically impossible.

Over the past decade, rapid non-invasive techniques (e.g. vibrational spectroscopy, hyperspectral / multispectral imaging) have been gaining popularity as rapid and efficient methods for assessing food quality, safety and authentication, and as a sensible alternative to the expensive and time-consuming conventional microbiological techniques.

Due to the multi-dimensional nature of the data generated from such analyses, the output needs to be coupled with a suitable statistical approach or machine learning algorithm before the results can be interpreted. Although these platforms have shown great potential to accurately and quantitatively assess freshness profiles (Panagou, Mohareb et al. 2011) (Mohareb, Iriondo et al. 2015) and safety parameters as well as adulteration (Ropodi, Panagou et al. 2016), their dependence on advanced data mining and statistical algorithms has been the main challenge facing their practical implementation across the food production and supply chain.

In order to overcome these challenges, we have developed sorfML (http://elvis.misc.cranfield.ac.uk/SORF), a Web platform prototype compatible with outputs from five instrumental platforms (See Figure) which provides means for interactive data visualisation, multivariate analysis (principal component analysis and hierarchical clustering), as well as the ability to use stored datasets to develop predictive models to estimate food quality. Currently, the platform provides users with means to upload their experimental datasets to the server, thanks to the truly generic MongoDB NoSQL database backend, and to develop classification and regression models to estimate quality parameters.

Objectives: The aim of this PhD is to expand the existing sorfML platform into a cloud-enabled framework that supports real-time monitoring of food products throughout the production chain. In order to achieve this, a series of advanced portable sensory devices will be deployed to examine their suitability as “Connected devices” in predicting quality and safety indices for various perishable food products. A series of machine learning and pattern recognition models will be developed and integrated within the cloud system. These include Ordinary Least Squares, Stepwise Linear classification and regression, Principal Component regression, Partial Least Squares discriminant analysis, support vector machines, Random Forests and k-Nearest Neighbours.

References: Mohareb, F., M. Iriondo, A. I. Doulgeraki, A. Van Hoek, H. Aarts, M. Cauchi and G.-J. E. Nychas (2015). "Identification of meat spoilage gene biomarkers in Pseudomonas putida using gene profiling." Food Control 57: 152-160.

Panagou, E. Z., F. R. Mohareb, A. A. Argyri, C. M. Bessant and G. J. Nychas (2011). "A comparison of artificial neural networks and partial least squares modelling for the rapid detection of the microbial spoilage of beef fillets based on Fourier transform infrared spectral fingerprints." Food Microbiol 28(4): 782-790.

Ropodi, A. I., E. Z. Panagou and G. J. E. Nychas (2016). "Data mining derived from food analyses using non-invasive/non-destructive analytical techniques; determination of food authenticity, quality & safety in tandem with computer science disciplines." Trends in Food Science & Technology 50: 11-25.

Student: Emma Sims

Please contact Dr Fady Mohareb (Cranfield), Senior Lecturer in Bioinformatics

Email: f.mohareb@cranfield.ac.uk

Cranfield University Supervisors: Dr Fady Mohareb, Prof. Andrew Thompson

Industrial partner: Centre for Crop Health and Protection (CHAP)

Background: The falling costs and the extremely high yield of genomic DNA sequencing data from next generation sequencing (NGS) technologies means that it is now routine to produce more than one billion sequencing reads within a few days[1]. This allows us to describe nearly all the sequence differences between hundreds of different plant lines[2,3]. But, to maximise the benefit from these rapid advances in NGS, we also need “next-generation phenotyping” to link genotypes and phenotypes—this allows us to understand which DNA differences cause a plant to look or respond differently. These differences can then be used by plant breeders to select genetic combinations that perform better in particular environments[4].

Cranfield University and AgriEPI Centre have recently acquired a state-of-the-art phenotypic platform installed within a purpose-built 300 m² glasshouse facility as part of a £5.5m investment. This unique LemnaTec® multi-sensor platform moves in three dimensions within the partially environmentally controlled glasshouse, while the plants remain static in containers of up to 1 m³. It is designed to precisely monitor the growth and physiology of crops under a range of soil conditions and rootzone stresses such as salinity, drought and compaction using RGB, hyperspectral, fluorescence and thermal cameras and a 3D laser scanner.

Objectives: In ongoing projects at Cranfield, the response of a population of tomato genotypes to various rootzone stresses (e.g. drought, compaction, salinity, pH) will be assessed using the LemnaTec platform, and the genotypes of these plants will be defined using NGS.

The aim of this PhD is to develop a cloud-based platform, integrating NGS and phenotypic measures acquired via the LemnaTec platform. Due to the huge size and heterogeneous nature of the phenotypic and genotypic data being integrated, the developed platform will be coupled with a Big Data-compatible database backend (e.g. Hadoop, NoSQL). The database will host a variety of short and long read sequencing genomic (and possibly transcriptomic) data. The platform will be coupled with an interactive Web-based UI that allows the integration of phenotypic and genomic datasets by providing several data analysis pipelines for automating variant calling and SNP identification, as well as crawling genomic and proteomic annotation from public databases. A series of mathematical models, including machine learning and pattern recognition models, will be developed to allow prediction of genotypic impacts on plant phenotypes.

References: [1] Metzker, M.L., Sequencing technologies - the next generation. Nat Rev Genet, 2010. 11(1): p. 31-46.

[2] Koboldt, D.C., et al., The next-generation sequencing revolution and its impact on genomics. Cell, 2013. 155(1): p. 27-38.

[3] Morozova, O. and M.A. Marra, Applications of next-generation sequencing technologies in functional genomics. Genomics, 2008. 92(5): p. 255-64.

[4] Hennekam, R.C. and L.G. Biesecker, Next-generation sequencing demands next-generation phenotyping. Hum Mutat, 2012. 33(5): p. 884-6.

Student: Ewelina Sowka

Please contact Dr Fady Mohareb (Cranfield), Senior Lecturer in Bioinformatics

Email: f.mohareb@cranfield.ac.uk

Cranfield University Supervisors: Dr Fady Mohareb, Prof. Andrew Thompson

Industrial partner: AgriEpi Centre

Libya faces a serious problem from desertification, manifested through vegetation deterioration, settlement expansion, and an increase in saline lands. These effects are obvious to see in many parts of the country. It is therefore both important and timely to develop a tool which can assess the desertification threat, so as to allow policy makers to undertake the appropriate measures required to protect and reverse the consequences of desertification. This research concerns the development of an Early Warning System to combat desertification in Libya. A key theme to this research is to establish an understanding of the impact of desertification in the country, by developing an integrated model of early warning for the causes of desertification, used as a method to identify the affected areas, where efforts can be best directed to combat desertification.

The ultimate research aim is to develop an Early Warning System (EWS) accounting for the drivers of desertification in Libya, by employing an integrated monitoring model approach. In concrete terms, the study seeks to develop a suitable methodology to represent Libyan conditions, to help combat desertification and promote the sustainable use of natural resources. Key tasks include: the appraisal of existing thematic information available including soil condition, land cover, erosion hazards and climatic information for the region; a review and selection of data themes; appropriate identification of risk; provision to decision-makers of necessary and timely information concerning challenges associated with desertification, and; the development of a prototype framework for desertification assessment in Libya supporting a risk assessment approach, adopting standardised observation methods for long-term monitoring of desertification indicators.

The research develops a novel implementation of a methodology and prototype framework, and is applied successfully in an area of the world where such approaches have never before been undertaken. To achieve this, a MEDALUS-derived approach has been selected to provide an initial basis for a methodology suitable for monitoring desertification, in turn providing the foundation for an Early Warning System for desertification in Libya.

The MEDALUS methodology adopted has been used to map Environmentally Sensitive Areas (ESAs) of desertification, based on MEDALUS classes and parameter weightings, but in each case adapted to suit the conditions of the selected study area, the Jeffara Plain, in Libya. The final ESAs used to assess desertification in the Jeffara Plain were created by combining five qualities, namely: climate, soil, vegetation, management and ground water quality.
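As an illustration of how quality layers can be combined into a single sensitivity score, the sketch below assumes the geometric-mean formulation commonly used in MEDALUS-style assessments, where each quality index is scored between 1 (least sensitive) and 2 (most sensitive). The function names and the example scores are hypothetical and are not taken from the thesis.

```python
import math


def geometric_mean(scores):
    """Return the geometric mean of a list of strictly positive scores."""
    if not scores or any(s <= 0 for s in scores):
        raise ValueError("scores must be a non-empty list of positive numbers")
    return math.exp(sum(math.log(s) for s in scores) / len(scores))


def esa_index(qualities):
    """Combine quality indices (assumed to lie in 1..2) into one ESA score.

    Assumption: the combination is an unweighted geometric mean, as in the
    standard MEDALUS approach; the study's adapted weightings are not known.
    """
    return geometric_mean(list(qualities.values()))


# Hypothetical quality scores for a single map cell.
cell = {
    "climate": 1.6,
    "soil": 1.4,
    "vegetation": 1.7,
    "management": 1.5,
    "groundwater": 1.3,
}
print(round(esa_index(cell), 3))
```

A geometric mean keeps the combined score within the same 1 to 2 range as its inputs, so the same class thresholds can be applied to the result.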

A sensitivity analysis and map output comparison was conducted in order to analyse whether the influence of using different scores for parameter classes had an impact on the outputs. This approach permitted testing and evaluation of the modified scores developed within this research, being used to determine the degree to which model outputs were changed with respect to the standard MEDALUS scores. In particular this approach was applied to the vegetation quality parameters.

Production of the Environmentally Sensitive Areas (ESAs) maps of desertification for the period 1986–2016 identified the trend of desertification that was used to model future change to the year 2030, revealing that the study area has a high sensitivity to desertification in the future.

The quantitative approach of ESAs used in assessing desertification provides an important basis for planning sustainable development programmes such as in the production of early warning systems for desertification. ESAs of desertification enable and demonstrate a clear vision of the risk state of desertification permitting quick actions to be planned.

Keywords: Desertification, early warning system, Environmentally Sensitive Areas, land degradation, mitigation

Student: Azalarib Ali

First supervisor: Dr Stephen Hallett

Supervisory panel: Dr Stephen Hallett; Tim Brewer

Natural rangelands are one of the significant pillars of support for the Libyan national economy. The total area of rangelands in Libya is c.13.3 million hectares. This resource plays an important role providing part of the food needs of the large numbers of grazing animals, in turn providing food for human consumption. In the eastern Libyan rangelands, vegetation cover has changed both qualitatively and quantitatively due to natural factors and human activity. This raises concerns about the sustainability of these resources. Observation methods at ground-based sites are widely used in studies assessing rangeland degradation in Libya. However, observations across the periods of time between the studies are often not integrated nor repeatable, making it difficult for rangeland managers to detect degradation consistently. The cost of such studies can be high in comparison to their accuracy and reliability, in terms of the time and resources required. These costs are not expected to encourage the local administrators of rangelands to make repeated or continuous observations in order to determine possible changes in managed areas. This has led to a lack of time-series data, and a lack of regularly updated information. The sustainability of rangelands requires effective management, which in turn is dependent upon accurate and timely monitoring data to support the assessment of rangeland deterioration.

The aim of this research has been to develop a framework for monitoring and evaluating rangeland condition in the east of Libya with a prediction of the future condition based on a historical assessment. This approach was achieved through the utilisation of medium resolution satellite imagery to classify vegetation cover using vegetation indices. A number of vegetation indices applied in arid and semi-arid rangelands similar to the study area were assessed using ground-based colour vertical photography (GBVP) methods to identify the most appropriate index for classifying percentage vegetation cover. The vegetation cover data were integrated with climate data, topography and soil erosion assessment using the RUSLE system to form a Rangeland Assessment Management Information System (RAMIS). These data were used to assess the historical and predicted future rangeland condition.
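For readers unfamiliar with RUSLE, the model estimates average annual soil loss as the product of five factors: rainfall erosivity (R), soil erodibility (K), slope length and steepness (LS), cover management (C) and support practice (P). The sketch below shows that product for a single grid cell; the function name and the factor values are hypothetical and do not come from the study.

```python
def rusle_soil_loss(r, k, ls, c, p):
    """Return RUSLE soil loss A = R * K * LS * C * P for one cell.

    All factors must be non-negative. Units follow those of R and K
    (commonly tonnes per hectare per year).
    """
    factors = {"R": r, "K": k, "LS": ls, "C": c, "P": p}
    for name, value in factors.items():
        if value < 0:
            raise ValueError(f"factor {name} must be non-negative")
    return r * k * ls * c * p


# Hypothetical factor values for one cell of sparsely vegetated rangeland.
loss = rusle_soil_loss(r=100.0, k=0.3, ls=1.2, c=0.5, p=1.0)
print(loss)
```

Because the factors multiply, a change in vegetation cover (the C factor) scales the estimated loss proportionally, which is why the vegetation classification feeds directly into the erosion assessment.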

The MSAVI2 vegetation index was identified as the most appropriate index to map vegetation cover as this had good correlation with the ground data (R² = 0.874). The RUSLE prediction identified that over 1,300,000 hectares were affected by soil loss over the time period from 1986 to 2010, representing nearly 97% of the study area. The RAMIS output indicated that most of the study area in 1986 was affected by a high risk of rangeland degradation, with less than 10% of the area having a moderate or low risk of degradation. The rangeland condition up to 2010 indicated a slight improvement in degradation distribution, with a slight decrease from 90% to 85% in the area at high risk of degradation and the area at low risk of degradation increasing from 2% to 8% in 2010. The predictions showed that the area of the low cover class, which in 2017 reached about 1,280,000 hectares, continues to increase through 2030 to 2050, to some 1,400,000 hectares, with a consequent increase in areas of high risk of rangeland degradation.
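For reference, MSAVI2 is computed per pixel from near-infrared (NIR) and red surface reflectance. The sketch below uses the standard closed-form expression; the function name and the sample reflectance values are illustrative assumptions, not data from the study.

```python
import math


def msavi2(nir, red):
    """Second Modified Soil-Adjusted Vegetation Index for one pixel.

    nir and red are surface reflectances, expected in the range 0..1.
    MSAVI2 = (2*NIR + 1 - sqrt((2*NIR + 1)**2 - 8*(NIR - RED))) / 2
    """
    b = 2.0 * nir + 1.0
    return (b - math.sqrt(b * b - 8.0 * (nir - red))) / 2.0


# Hypothetical reflectances: sparse vegetation over bright soil.
print(round(msavi2(nir=0.5, red=0.1), 4))
```

Unlike NDVI, the index adjusts for soil background brightness, which is one reason it tends to be preferred in arid and semi-arid rangelands with sparse cover.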

The result of implementing the RAMIS framework over the historical period illustrates changes in the rangeland condition, reflecting the fluctuation in the effectiveness of rangeland management development projects linked to the financial resources available in the 1980s, with increasing numbers of grazing animals exceeding the rangeland capacity and the expansion of rangeland cultivation. Libyan rangeland managers need to focus more on expanding the fenced area, conducting soil survey, and implementing soil erosion studies that can be used in erosion model calibration at a large scale to better inform rangeland management planning. Otherwise, the future projections of change up to 2050 indicate a continuance of the deterioration of rangeland condition, increasing the areas of low vegetation. However, this projection is based only on the vegetation data as the lack of available climate data did not permit its incorporation into the prediction.

Keywords: Libyan rangeland, land degradation indicators, RUSLE, rangeland condition, rangeland management

Student: Abdul Al-Bukaris

First supervisor: Tim Brewer

Supervisory panel: Tim Brewer; Dr Stephen Hallett

The vegetation cover in Al Jabal Al Akhdar has been subjected to human and natural pressures that have contributed to the deterioration and shrinking of the vegetated area. Therefore, the principal goal of this dissertation was to establish and evaluate the changes in the natural vegetation of the Al Jabal Al Akhdar region in the period following the 2011 Libyan uprising. The thesis comprises three main objectives: the first is to provide a quantitative assessment of changes in natural vegetation cover over the period 2004-2016 and identify the consequent impact of human activity; the second is to investigate the impact of climate on the natural vegetation cover; and the third is to evaluate the ability of machine learning techniques to predict the natural vegetation cover types.

GIS and remote sensing techniques and data have been used to achieve these objectives, along with ancillary data, across 53 sites in the area of interest. Six classified Landsat image scenes were used in a post-classification comparison approach to detect changes and the types of change, with ENVI, ArcGIS and MS-Excel software and programme scripts used to detect land use/land cover (LULC) changes and determine the impact of human activities. The relationship between MODIS NDVI and satellite-based climate data was examined statistically, with the analyses undertaken using SPSS and MS-Excel software. Lastly, the machine learning ‘J48’ algorithm, within the WEKA tool, was applied to ANDVI, climate data and spatial characteristics for the 53 sites, and analysed statistically to test its ability to predict the natural vegetation type.

The main research findings have confirmed that from 2004-2016, natural forest and rangelands decreased by 71,543 ha or 7.10% of the total area of interest as a result of urbanisation and agricultural expansion. Human activities have had more impact than climate on LULC changes. The machine learning classifier decision tree ‘J48’ algorithm was also found to have the ability to classify and predict the natural vegetation cover type.

Finally, an evaluation was undertaken of the current distribution of natural vegetation cover, and a forecast of future changes, utilising high-resolution imagery. The conclusion recommends developing action plans, using tools such as those described, to manage and protect the natural vegetation cover.

Keywords: Post-classification comparison, Land use cover change, J48 algorithm, MODIS NDVI, Urbanisation, Al Jabal Al Akhdar

Student: Nagat Al Mesmari

First supervisor: Dr Stephen Hallett

Supervisory panel: Dr Stephen Hallett; Dr Rob Simmons

Newcastle University

Projects running at Newcastle University. For further details contact Professor Stuart Barr.

The project will address a current national issue of evaluating flood risk from multiple sources (fluvial, pluvial, and groundwater), something that has become evident in the extensive flooding over the past 10 years (e.g. summer flooding in 2007, and winter flooding in 2013/14). Existing computer modelling techniques are designed to handle each type of flooding separately. This study will integrate existing state-of-the-art models to provide a novel modelling capability, which will be used with new data on high-intensity rainfall patterns. Following model integration and testing for a range of case study sites across the UK, the integrated model will be used with rainfall data representing current and possible future climates to assess the enhanced flood risk arising from multiple sources, providing an improved basis for flood management in the UK and a methodology that can be applied internationally.

Depending on the background of the student, training will be provided through formal courses in hydrogeology, hydrology, hydraulics, modelling, climate change, statistics, and programming. The student would expect to gain skills in fundamental modelling techniques, as well as experience in current issues in flood risk assessment.

Student: Ben Smith

First supervisor: Dr Geoffrey Parkin

Email: geoff.parkin@ncl.ac.uk

Telephone: +44 (0) 191 208 6618

Commenced: October 2015

Supervisory panel: Newcastle Dr Geoffrey Parkin; Professor Hayley Fowler

Infrastructure systems (energy, transport, water, waste and telecoms) globally face serious challenges. Analysis in the UK and elsewhere identifies significant vulnerabilities, capacity limitations and assets nearing the end of their useful life. Against this backdrop, policy makers internationally have recognised the urgent need to de-carbonise infrastructure, to respond to changes in demographic, social and life style preferences, and to build resilience to intensifying impacts of climate change.

This PhD will draw on advances in (i) methods for broad scale infrastructure risk analysis, (ii) readily available datasets describing global climate and associated hazards, global exposure, and increasingly information on the location of key infrastructure networks, and, (iii) 'big data' processing and cloud computing techniques, to enable the first global infrastructure risk analysis.

The research will develop an integrated model that uses data from global mapping sources such as Google and OpenStreetMap; i-COOL global marine networks (port flooding); CAA (airport flooding); global flood hazard maps (WRI: floods.wri.org) and climate model outputs (climateprediction.net); and population location (Global Rural-Urban Mapping Project) to look at future risks.

The project will develop an integrated assessment model of global transport networks, where the importance of major infrastructure network components is assessed based upon population served, information on route type (e.g. main, secondary road etc.), other published information (e.g. route frequency for airlines) and so on. This information, integrated with hazard extents, will provide a unique global risk assessment.

The size of the spatial datasets necessitates a cloud or distributed computing approach to handle and process the data. Web-enabled tools will be developed and the integrated framework for managing the workflow of these web-based tools will be designed with extensibility in mind to enable other researchers to augment the model as new data and capabilities become available.

Student: Feargus McClean

First supervisor: Professor Richard Dawson

Email: richard.dawson@newcastle.ac.uk

Telephone: +44 (0) 191 208 6618

Commenced: October 2015

Supervisory panel: Newcastle Professor Richard Dawson; Dr Sarah Dunn; Dr Hiro Yamazaki; Dr Sean Wilkinson

Remote sensing provides a mechanism for forewarning of risks of potential loss of life due to water failure and flooding through monitoring of reservoir and lake levels and river discharge. Further, many river catchments are managed with dams constructed for hydroelectric power, fisheries and water resources, and these dams often have a detrimental effect on livelihoods, particularly downstream, while river discharge records across major catchments suffer from either a lack of gauge data or data unavailability. With precipitation data lacking in many parts of the world, information concerning water failure or flood events is often not communicated downstream, with potentially catastrophic consequences.

Near real-time quantification of lake/reservoir levels and volumes and river stage heights and discharge can be recovered from satellite altimetry, an estimation of the lake area or river width from near real-time satellite imagery, and some mechanism to develop a stage-discharge relationship, perhaps based on data from a single gauge or on hydrological modelling. This project will develop a near real-time capability for the latest delay-Doppler type of altimeter (carried onboard CryoSat-2 and the soon to be launched Sentinel-3 satellites) and optical and/or Synthetic Aperture Radar imagery for river and reservoir/lake extent. The river mask will be used both to constrain the satellite altimetry to the inland water target and to supply river width and lake extent for inferences of variations in discharge and lake volume.
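One common form of stage-discharge relationship is a power-law rating curve, Q = a (h - h0)^b, fitted to paired stage and discharge observations from a gauge. The sketch below fits such a curve by least squares in log space and then converts a stage height into a discharge estimate. The power-law form, the known datum h0, the function names and the synthetic observations are all assumptions for illustration; the project text does not specify a method.

```python
import math


def fit_rating_curve(stages, discharges, h0=0.0):
    """Fit Q = a * (h - h0)**b by linear least squares on log-log values.

    stages and discharges are paired observations; every stage must
    exceed h0 and every discharge must be positive.
    """
    xs = [math.log(h - h0) for h in stages]
    ys = [math.log(q) for q in discharges]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    b = sxy / sxx
    a = math.exp(mean_y - b * mean_x)
    return a, b


def discharge_from_stage(stage, a, b, h0=0.0):
    """Estimate discharge from a stage height using the fitted curve."""
    return a * (stage - h0) ** b


# Synthetic gauge record generated from a known curve (a=5.0, b=1.6).
gauge_stage = [0.5, 1.0, 1.5, 2.0, 3.0]
gauge_q = [5.0 * h ** 1.6 for h in gauge_stage]
a, b = fit_rating_curve(gauge_stage, gauge_q)

# An altimeter-derived stage (hypothetical value) converted to discharge.
print(round(discharge_from_stage(2.4, a, b), 2))
```

In practice the fitted curve would only be trusted within the range of stages observed at the gauge, since extrapolation beyond bankfull conditions can be unreliable.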

Such a capability will reduce the risk associated with water failure and floods providing an early warning with time lapse of less than 24 hours limited by the time that the quick-look satellite altimetric waveforms are made available to the user.

Student: Miles Clement

First supervisor: Professor Philip Moore

Email: philip.moore@ncl.ac.uk

Telephone: +44 (0) 191 208 5704

Commenced: October 2015

Supervisory panel: Newcastle Professor Philip Moore; Professor Chris Kilsby; Professor Philippa Berry

Spatial risk assessment cannot be considered in isolation from other factors such as the development of long term sustainable plans for land use development and the implementation of planning decisions that mitigate adverse climate change impacts such as increased heat. To date however, the ability to develop spatial, multi-objective risk and sustainability planning tools has been limited by intractable computational run-times. Cloud computing now offers the potential to overcome this limitation, facilitating the development of spatial optimisation risk and sustainability planning tools that allow a large number of risk and sustainability objectives to be considered in the production of optimised spatial development plans.

In this PhD, cloud computing will be employed to provide the next generation of multi-objective, spatial risk and sustainability development plans for the UK. Using nationally available data-sets on climate related hazards, such as probabilistic predictions of heat, pluvial, fluvial and storm surge related flooding, along with future predictions of population demographics, the PhD will investigate how temporal spatial plans can be developed that minimise exposure to future spatial risk whilst maximising key local, regional and national sustainability objectives (e.g., minimising overcrowding, reducing urban sprawl, maximising access to low emission public transport etc.).

During the PhD, cloud computing training will be provided via a number of the Newcastle EPSRC CDT Big Data & Cloud Computing modules.

Student: Grant Tregonning

First supervisor: Dr Stuart Barr

Email: stuart.barr@ncl.ac.uk

Telephone: +44 (0) 191 208 6449

Commenced: October 2016

Supervisory panel: Dr Stuart Barr (Newcastle); Professor Richard Dawson (Newcastle); Dr Raj Ranjan (Newcastle)

The Gravity Recovery and Climate Experiment (GRACE) mission, a tandem satellite pair, was launched in 2002 and has provided unprecedented insight into mass change including polar ice mass, glaciation, hydrology and earthquakes. The novel aspect is the inter-satellite microwave ranging device that is accurate to a few microns and is highly sensitive to the differential pull from gravity on the two satellites. Solutions for gravity field snapshots are made every 10 days to a month, with the differences revealing mass change associated with surface processes once longer term trends such as Glacial Isostatic Adjustment (GIA) are allowed for. Of the solution approaches, the mass concentration (mascon) method has been established as superior to spherical harmonics. At Newcastle, 2-degree mascons over successive 10-day periods form the basis of time series of mass change in our current studies. The mascons are constrained geographically over Antarctica, Greenland, land and the oceans to enable a solution to be obtained for over 10,000 mascons globally. The usage of 2-degree mascons is a limiting factor and needs to be refined for basin-scale change detection, particularly in delineating the basin boundaries.

The GRACE mission is near the end of its operational lifetime but is still providing data. The uniqueness and scientific value of the mission has led to a GRACE Follow-on (FO) mission scheduled for launch in December 2017/January 2018. The GRACE FO mission has the same inter-satellite K band microwave device for providing range-rate measurements but will also carry a laser interferometer on each satellite to provide range and directional data. Although experimental and not continuously operational, when available the laser data will be an enhancement over the microwave data.

Student: Jerome Richmond

First supervisor: Professor Philip Moore (Newcastle)

Telephone: +44 (0) 191 208 5040

Email: philip.moore@ncl.ac.uk

Supervisory panel: Professor Philip Moore (Newcastle); Dr Ciprian Spatar (Newcastle)

Data collection systems for the Internet of Things (IoT) derived from pervasive sensors are being deployed in many cities across the UK including Newcastle. These systems produce significant data volumes for real-time computation and for analysis of historical data, such as data mining. Cloud computing is the cutting-edge computation platform that offers nearly unlimited computation resources through cloud datacentres (CDC). In addition to the cloud, a new type of computing resource, the Edge datacentre (EDC), offers computing and storage on a smaller scale than a CDC and is positioned closer to data sources or sinks. It can provide immediate analysis of streaming data, and better support for real-time decision making in latency-sensitive workflows, leaving CDCs to deal with intensive computing and large-scale storage. EDCs have the following benefits: (i) energy saving for the resource-constrained edge devices which, currently, primarily upload data to the CDC; (ii) reduced network congestion by filtering non-relevant events at the edge; and (iii) reduction in latency for event detection as sensors no longer need to send data to far-off CDCs.

Data analytics requires data to be analysed while they are shared across multiple parties. For example, in a smart hospital, IoT data gathered through sensor streams will be shared among all participants including doctors, patients, data analysts, emergency departments and government auditors / law enforcers. These data need to be shared with the participants to a certain degree in real-time along with the patient record database, where privacy-preserving techniques such as anonymization and permutation need to be applied before sharing. Current privacy-preserving data publishing (PPDP) technologies enable data anonymization via generalisation/specialisation on data records. However, current research on PPDP offers limited support for cloud and IoT, and the performance and scalability of the data anonymization/permutation algorithms are not satisfactory under a hybrid cloud environment with CDC/EDC/private cloud and distributed programming models such as MapReduce.
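To make the idea of anonymization via generalisation concrete, the sketch below coarsens two quasi-identifiers (age into bands, postcode into a prefix) and then checks whether every resulting group contains at least k records, which is the k-anonymity criterion. The choice of k-anonymity, the field names, the band width and the sample records are assumptions for illustration only; the project text does not name a specific algorithm.

```python
from collections import Counter


def generalise(record, age_bin=10, postcode_chars=3):
    """Coarsen the quasi-identifiers of one record.

    Age is replaced by a band (e.g. 30-39) and the postcode by its
    leading characters followed by '*'.
    """
    low = (record["age"] // age_bin) * age_bin
    age_band = f"{low}-{low + age_bin - 1}"
    area = record["postcode"][:postcode_chars] + "*"
    return (age_band, area)


def is_k_anonymous(records, k, age_bin=10, postcode_chars=3):
    """True if every generalised group holds at least k records."""
    groups = Counter(generalise(r, age_bin, postcode_chars) for r in records)
    return all(count >= k for count in groups.values())


# Hypothetical patient records (quasi-identifiers only).
patients = [
    {"age": 31, "postcode": "NE1 7RU"},
    {"age": 35, "postcode": "NE1 4LP"},
    {"age": 38, "postcode": "NE1 3JD"},
]
print(is_k_anonymous(patients, k=3))
```

Wider age bands or shorter postcode prefixes make the criterion easier to satisfy at the cost of analytical detail, which is the trade-off that generalisation-based methods have to manage.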

This project will focus on designing advanced data privacy preserving tools and methodologies to efficiently protect the key information included in dynamic and large-scale IoT sensor data, based on the existing data anonymization algorithms. The main outcomes of this project will include a framework for securely processing and sharing of heterogeneous datasets under the specification of law and user privacy requirements.

This project aligns mainly with the call theme of “Security and legal issues for handling data and information as it relates to risk management”. This proposal combines the disciplines of Engineering and Computer Science/Informatics to understand and model the requirements from users and laws, and to develop privacy-preserving tools for shared data analysis and risk evaluation in urban data.

Many cities in the UK and abroad are attempting to move forward the smart cities agenda through urban sensing programmes. These programmes typically involve the deployment of low cost environmental sensors measuring climate, air quality and people movement, along with other metrics. Whilst current data volumes are relatively small, over the next few years these pervasive sensors (and other platforms such as smartphone-enabled sensors) have the potential to provide enormous quantities of streaming data. The cloud provides a means to process these large volumes using an on-demand model that potentially reduces the overall cost of investment in data storage and processing requirements within a city. In Newcastle, the Science Central programme includes an investment of £500k in sensor hardware deployed around the city and a further investment in a fully instrumented building due to open in 2017. These data streams will provide a platform for developing an integrated framework of workflows that combine urban observational and static data for urban risk analysis across spatial and temporal scales, where a new layer of information security and data protection is essential.

Understanding and forecasting rainfall, especially from thunderstorms, and the consequent risk of flooding in cities is a long-standing and important problem in both practical and research terms. Rainfall radar is our most powerful observational tool, giving real-time spatial images of rainfall, but unfortunately has major issues with accuracy and reliability. There are many sources of error in the calibration and interpretation of radar data, as the return signal can be the product of reflection from multiple rainfall targets, buildings and atmospheric phenomena.

This project will take a fresh view of making better use of radar data, using new mathematical and statistical approaches, empowered by Big Data computational methods. A new high resolution rainfall radar is operating in Newcastle for use in flood risk studies in combination with a dense telemetered rain gauge network across the city and surrounding area. This project will develop and apply new statistical simulation approaches to using the radar and rain gauge data effectively, recognising the usefulness and importance of both the space and time properties of the rainfall fields, together with large volumes of data from ground-based rain gauges.
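As a point of reference for combining the two data sources, the sketch below shows a simple mean field bias adjustment, in which a radar field is rescaled so that its total matches the gauge total at the gauge locations. This is a deliberately basic, well-known baseline and not the new statistical simulation approach the project will develop; all function names and rainfall values are hypothetical and chosen only for illustration.

```python
def mean_field_bias(gauge_mm, radar_mm):
    """Ratio of total gauge rainfall to total radar rainfall at gauge sites."""
    if len(gauge_mm) != len(radar_mm):
        raise ValueError("gauge and radar samples must be paired")
    radar_total = sum(radar_mm)
    if radar_total <= 0:
        # No radar signal to scale, so leave the field unchanged.
        return 1.0
    return sum(gauge_mm) / radar_total


def adjust_radar_field(radar_field, bias):
    """Scale every radar pixel by the same bias factor."""
    return [[pixel * bias for pixel in row] for row in radar_field]


# Hypothetical hourly accumulations in mm (illustrative values only).
gauge_mm = [4.0, 6.0, 10.0]            # telemetered rain gauges
radar_at_gauges_mm = [2.0, 3.0, 5.0]   # radar pixels over the same gauges
radar_field = [[1.0, 2.0], [3.0, 4.0]]  # small radar grid to be adjusted

bias = mean_field_bias(gauge_mm, radar_at_gauges_mm)
adjusted = adjust_radar_field(radar_field, bias)
```

A single scaling factor ignores the space and time structure of the rainfall field, which is exactly the information the project aims to exploit.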

The student will undertake a varied research programme, which could include: meteorology of rainfall, physics of radar signals, statistics of time series and spatial dependence of (pseudo-)random fields, mathematics of conditional simulation, and use of Cloud technology for data processing and computational simulation. Finally, the student will liaise with operational engineers and designers on the best use of their results in real-time and long-term assessments of flood risk and intervention in cities.

Please contact Professor Chris Kilsby (Newcastle). Email: chris.kilsby@ncl.ac.uk

Telephone: +44 (0) 191 208 5614

Supervisory panel: Professor Chris Kilsby (Newcastle); Professor Andras Bardossy (Newcastle)

Industrial partner: Mark Dutton (Environmental Measurements Ltd. - EML)

This project makes use of the increasing interest and technological developments in ‘citizen-science’ environmental observations, and aims to bring together national scale hydroclimatic data from formal observation networks with observations made by individuals and communities, to improve risk assessments related to flash flooding. Our recent research in Africa (Walker et al., 2016) and in the UK (Starkey et al., in review) has demonstrated both that good quality information can be provided by citizen observers, and the value of community-based observations in modelling catchment response from intense localized convective rainfall events, which were not picked up by formal raingauges or radar coverage.

The Met Office have recently upgraded a web-based platform, WOW (Weather Observations Website), designed to collect, store, and disseminate observations by the growing weather observation community in the UK. It is available for use worldwide, and currently holds over 900 million observations recorded since 2011 from over 10,000 sites globally. In the UK, there are currently 3,100 active weather observing sites reporting a range of parameters including, in many cases, rainfall, of which 318 are formal Met Office sites. There are also around 1,600 formal rainfall observation sites in the UK (monitored by the Met Office, Environment Agency (EA), Natural Resources Wales (NRW) and Scottish Environmental Protection Agency (SEPA)). The citizen-based observations therefore represent a significant additional resource which at present has not been systematically analysed, and no protocols have been developed for its use in the assessment or mitigation of risk from flooding.

The student will address this research gap, working within a strong research team at Newcastle University, linking with current research projects on development of sub-daily precipitation datasets (INTENSE), and assessment of flood risk in catchments and urban areas (SINATRA and TENDERLY, part of the NERC Flooding From Intense Rainfall programme). The project will assess the value of citizen observations in improving spatial rainfall estimation during extreme events, and the subsequent impact on flood risk assessment.

References:

Starkey, E, Parkin, G, Birkinshaw, SJ, Quinn, PF, Large, ARG, and Gibson, C (in review). Demonstrating the value of community-based (‘citizen science’) observations for catchment modelling and characterisation. Journal of Hydrology.

Walker D, Forsythe N, Parkin G, Gowing J (2016). Filling the observational void; scientific value and quantitative validation of hydro-meteorological data from a community-based monitoring programme in highland Ethiopia. Journal of Hydrology 538, 713–725.

Blenkinsop, S, Lewis, E, Chan, SC, Fowler, HJ (2016). Quality-control of an hourly rainfall dataset and climatology of extremes for the UK. Int. J. Climatol. DOI: 10.1002/joc.4735

Approaches and tools for the identification of sources of risks, their drivers and impacts within complex systems; the project will use multiple sources of data (formal monitoring networks, citizen observations, and radar) to improve the understanding and characterisation of localised intense rainfall, and will use hydrological and hydrodynamic models to assess their impact on flood risk.

Robust methods to quantify and analyse risks and their drivers including sound mathematical and statistical approaches; approaches have been developed under the INTENSE project for data quality control, and temporal and spatial interpolation (Blenkinsop et al., 2016); these will be used as the basis for analysing the use of citizen observations, particularly focussing on extreme events.

Tools for developing, managing and analysing ‘Big Data’, to understand risk better, and to apply modern cloud computing approaches; the project will require cloud computing approaches to firstly analyse large national high-temporal-resolution (hourly or sub-hourly) rainfall datasets, and secondly to run hydrological and hydrodynamic impact models to assess uncertainty reduction for flood impacts arising from the enhanced datasets.

Utilisation of multiple models and integrated modelling (for multi-hazard modelling and to combine environmental hazard models with information about vulnerabilities/exposure of a population); the study will consider flooding from intense rainfall, as fluvial flooding in rapid response catchments and as direct pluvial (surface water) flooding. This will involve a combination of catchment hydrological models and hydrodynamic flood models to assess flood risk from these sources.

Measurement, characterisation and handling of uncertainty, including within model chains; the study will assess the value of local citizen or community-based monitoring of rainfall and river levels in reducing uncertainty of flood risk assessment.

New approaches to visualise and communicate risk, including to the public, to enable decision-making; a key benefit of developing improved real-time flood risk assessment using citizen observations is to help inform communities in rapid response catchments or outside main flood plains that are not part of the national EA flood warning service. The TENDERLY project will work with a selection of pilot communities to assess their use of rainfall and flood risk information; the availability of appropriate information, suitably communicated, is an important part of the motivation for effective long-term monitoring. This study will build on these pilot sites, producing relevant risk information using national data.

The risk of flooding, and particularly flash flooding, appears to be rising, and heavy rainfall and flooding are expected to increase with global warming. Understanding how intense rainfall patterns might change, both temporally and spatially, is important for understanding potential changes to future flood risk. However, current formal rainfall observation networks are spatially sparse, especially at sub-daily scales. The growth of technology and big data has, however, allowed individual citizens to make their own measurements, and the number of these far surpasses that of formal observations. There is great potential for these to be used to supplement formal observations in space and time to better understand flood risk.

Citizen science observations present an opportunity to improve the assessment of flood risk. However, we are not sure of the uncertainties associated with such observations and how they can be best used to supplement the existing formal observational networks. This project seeks to identify and quantify these uncertainties and develop new methods for using these informal observations in flood risk assessment.

The overall aim of the project is to develop and test methodologies for using citizen observations in flood risk assessments in the UK.

This project is about making the visualisations and maps produced from complex risk-based models more effective, so that stakeholders can easily understand the information and data presented to them and decisions can be made.

Humans are only able to process a certain amount of information. The project is about understanding this quantity, but also about understanding the background knowledge, experience and perception of the user, and how these alter what is ‘seen’, what is ‘understood’ and what is ‘knowledge’.

University of Cambridge

Projects running at University of Cambridge. For further details contact Professor Tom Spencer.

The H1N1 pandemic in 2009 has been the catalyst which has shown how important locally based human observers are for outbreak detection, verification and pinpoint geocoding. Given the limited resources of public health agencies, the paramount challenge now is to harness the power of Web-based reports. Global bio-surveillance systems such as GPHIN, MediSys, BioCaster and HealthMap trawl through high volume, high velocity news reports in real time and are now seen as adding substantial value to the efforts of national and transnational public health agencies. With concerns about the rapid spread of newly emerging diseases such as A(H5N1), re-emerging diseases such as Ebola and the threat of bioterrorism there has been increasing attention on bio-surveillance systems which can complement traditional indicator networks by detecting events on a global scale so that they can be acted on close to source. The goal of this research project (‘Panda Alert’) is to produce a robust computational model that understands unstructured natural language signals in online news media reports for early risk alerting of adverse health events. Key beneficiaries will be the public health and animal health surveillance communities. The project will build on substantial groundwork already done in the BioCaster project (2006-2012, PI: Collier) and inter-disciplinary collaborations with the international public health community.

The project will involve inter-disciplinary collaboration with experts at the Joint Research Centre (JRC) at Ispra (Institute for the Protection and Security of the Citizen) who provide the European Commission’s MediSys bio-surveillance system. The JRC group will provide (1) expert background information into the context of biomedical surveillance, (2) an introduction to the state-of-the-art techniques used in MediSys, (3) guidance on the selection of real-world agency interest for analysis, and (4) feedback on system performance.

The Panda Alert project is aligned with DREAM themes in several areas:

1. Approaches and tools for the identification of sources of risks, their drivers and impacts within complex systems.

Previous research by the lead supervisor in the BioCaster project (2006-2012) pioneered the use of time series analysis for alerting epidemics at the country level (e.g. using CDC’s Early Aberration Reporting System (EARS) method for syndromic surveillance) using hand-crafted features mined from news media reports. Similarly, JRC’s MediSys pioneered Boolean rule alerting, and Harvard University’s HealthMap team has recently applied Incidence Decay and Exponential Adjustment to project growth of outbreaks based on news data. Panda Alert proposes to improve the state of the art in health risk detection by incorporating richer and more complex features into the language models than features which are known a priori to indicate risk to human health (e.g. see the International Health Regulations (IHR) 2005 Annex B decision instrument).

2. Tools for developing, managing and analyzing ‘Big Data’, to understand risk better, and to apply modern cloud computing approaches.

As noted above, our methods will develop the use of statistical machine learning on natural language texts (e.g. recursive neural networks, kernel methods) to classify news reports for public health risk. All tools and data sets from this research will be available for download from public repositories such as GitHub and Zenodo. The software, where appropriate, will be cloud ready and tested on our own group HPC cluster (SLURM middleware, currently 32 cores, 512 GB RAM, expanding soon to 48 cores, 756 GB RAM). The techniques will be tested on real-world high volume, high veracity data in the open source GDELT data set. We estimate that real-time analysis will have to scale to handle approximately 300,000 news items per day in >40 languages, with surge capacity during epidemics such as A(H1N1) (2009) or SARS (2002). An integral part of the project will be to develop a fully functioning system for real-time alerting and mapping of risk based on the new techniques.

3. New tools and approaches to multi-hazard assessment and interconnected risks (cascade effect).

The use of distributed semantic representations for event reporting brings with it the powerful possibility to generate compositional representations that can be tested for alerting severity. We propose to explore the space of distributed semantic compositions, bounded within a time window, in order to find potential interconnected risks.
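To make the time-series alerting mentioned under theme 1 concrete, the following is a minimal sketch of a moving-baseline aberration rule, in the spirit of the simplest rules commonly used in syndromic surveillance: a day is flagged when its count exceeds the mean of the preceding days by several standard deviations. It is not the BioCaster or Panda Alert implementation. The function name, the 7-day baseline, the threshold of 3, the `min_sigma` floor and the daily counts are all assumptions made for illustration.

```python
from statistics import mean, stdev


def baseline_alerts(counts, baseline=7, threshold=3.0, min_sigma=0.5):
    """Return indices of days whose count is unusually high.

    Each day is compared with the mean and sample standard deviation of
    the `baseline` days immediately before it. `min_sigma` is an assumed
    floor that avoids a zero spread when the baseline is flat.
    """
    alerts = []
    for day in range(baseline, len(counts)):
        window = counts[day - baseline:day]
        mu = mean(window)
        sigma = max(stdev(window), min_sigma)
        if counts[day] > mu + threshold * sigma:
            alerts.append(day)
    return alerts


# Hypothetical daily numbers of news reports mentioning one disease
# in one country (illustrative values only).
daily_reports = [2, 3, 2, 4, 3, 2, 3, 3, 12, 4]
alert_days = baseline_alerts(daily_reports)
```

With these illustrative counts only the jump to 12 reports is flagged; the following day is not, because the spike has by then inflated the baseline spread.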

Epidemics such as SARS, Ebola and pandemic influenza threaten lives and livelihoods across the globe. Based on the PI’s nine years of experience in epidemic intelligence from social sensors, the goal of this project is to build a high-quality risk alerting system using distributed semantic representations of news about public health hazards. The PI’s previously reported experiments against human standards have shown that health news is effective at outbreak event detection (F1=0.56) measured against ProMed-mail alerts. The resulting BioCaster system was in regular use by national and international public health agencies such as the World Health Organization. This project aims to build on this legacy by investigating novel methods for event alerting using the latest advances in natural language processing technology by groups at Stanford University (PI: C. Manning) and McGill University (PI: Y. Bengio), i.e. in distributed semantic feature representations and deep learning.
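For readers unfamiliar with the F1 measure quoted above, it is the harmonic mean of precision (the share of system alerts that match a reference alert) and recall (the share of reference alerts that the system found). The sketch below computes it from raw counts; the function name and the counts are hypothetical and are not taken from the reported experiments.

```python
def f1_score(true_positives, false_positives, false_negatives):
    """Harmonic mean of precision and recall, computed from raw counts."""
    predicted = true_positives + false_positives
    actual = true_positives + false_negatives
    if predicted == 0 or actual == 0:
        return 0.0
    precision = true_positives / predicted
    recall = true_positives / actual
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Hypothetical evaluation: 30 system alerts matched a reference alert,
# 10 did not, and 20 reference alerts were missed by the system.
score = f1_score(true_positives=30, false_positives=10, false_negatives=20)
```

Here precision is 0.75 and recall is 0.60, so the score lies between them and is pulled towards the lower value.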